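Last time we saw that RealInterceptorChain#proceed calls each interceptor's intercept method in turn. This time we will go through the interceptors one by one and look at how each of them implements intercept.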

getResponseWithInterceptorChain

```java
Response getResponseWithInterceptorChain() throws IOException {
```

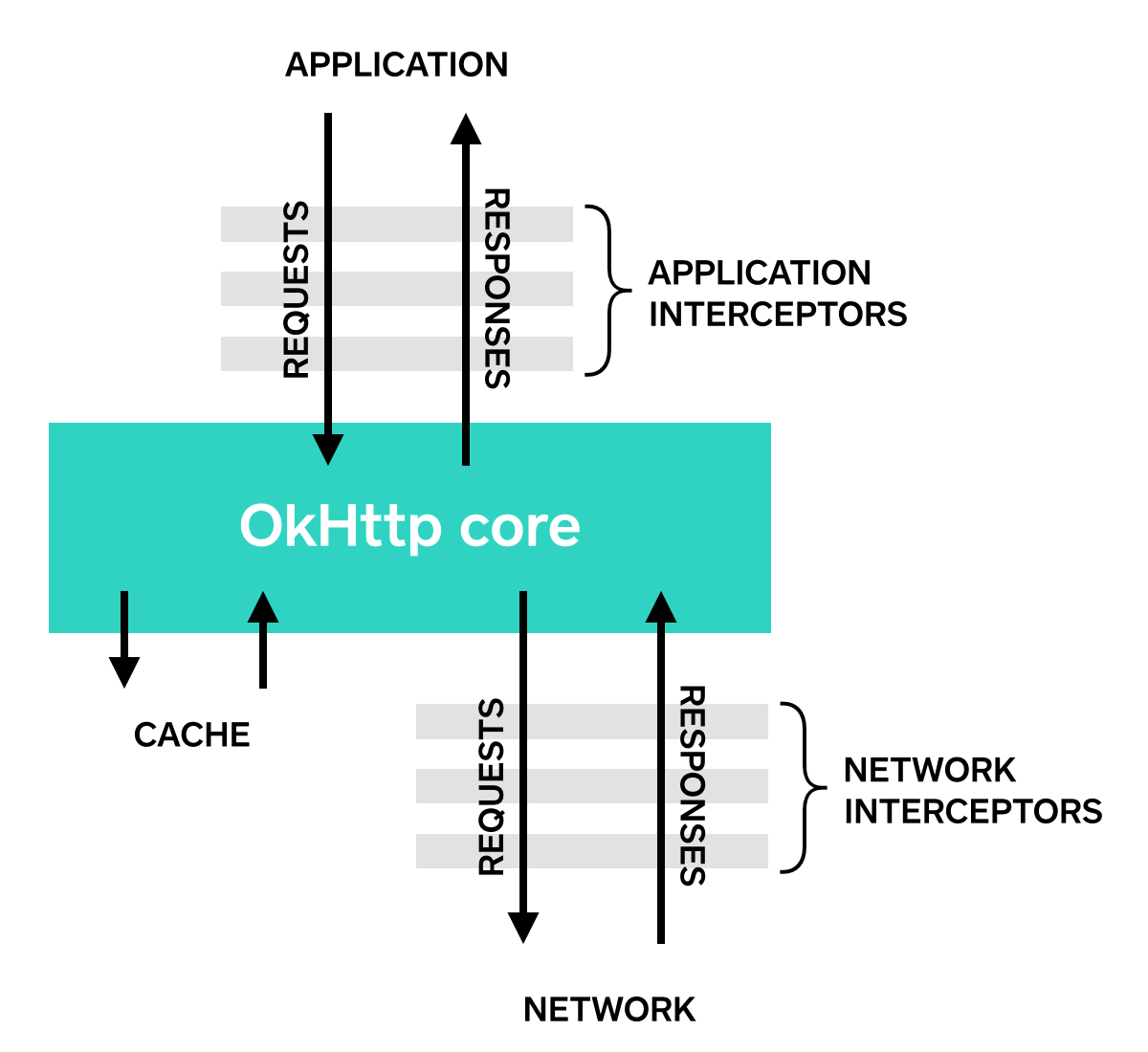

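From the code we can see that the first interceptors added to the list are the ones the user registered on OkHttpClient. As the figure shows, there are two kinds of interceptors: application interceptors and network interceptors. This step only adds the application-level ones, so their intercept methods are called first.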

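After passing through our own interceptors (provided none of them handles the request itself and returns a response), the Request continues down the chain. Let's now look at what each of the built-in interceptors does.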
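The chain-of-responsibility mechanism that proceed relies on can be modelled in a few lines. The sketch below is a hypothetical, stdlib-only simplification: `MiniInterceptor`, `MiniChain` and `ChainDemo` are illustrative names, not OkHttp's real `Interceptor` or `RealInterceptorChain`, and requests and responses are plain strings. Each `proceed` call creates a chain pointing at the next index and invokes the current interceptor; an interceptor can act before and after calling `proceed`, or return a response without calling it at all.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of the chain of responsibility behind
// RealInterceptorChain#proceed. Requests and responses are plain strings.
interface MiniInterceptor {
    String intercept(MiniChain chain);
}

final class MiniChain {
    private final List<MiniInterceptor> interceptors;
    private final int index;
    private final String request;

    MiniChain(List<MiniInterceptor> interceptors, int index, String request) {
        this.interceptors = interceptors;
        this.index = index;
        this.request = request;
    }

    String request() {
        return request;
    }

    // Build a chain that points at the next interceptor, then call the current one with it.
    String proceed(String request) {
        if (index >= interceptors.size()) {
            throw new IllegalStateException("no interceptor left to produce a response");
        }
        MiniChain next = new MiniChain(interceptors, index + 1, request);
        return interceptors.get(index).intercept(next);
    }
}

class ChainDemo {
    static String run(String request, List<String> log) {
        List<MiniInterceptor> interceptors = new ArrayList<>();
        // "Application" interceptor: sees the request first and the response last.
        interceptors.add(chain -> {
            log.add("app before");
            String response = chain.proceed(chain.request() + "+app");
            log.add("app after");
            return response;
        });
        // Last interceptor: produces the response instead of calling proceed.
        interceptors.add(chain -> "response to " + chain.request());
        return new MiniChain(interceptors, 0, request).proceed(request);
    }
}
```

Calling `ChainDemo.run("GET /", log)` returns `"response to GET /+app"` and leaves `log` holding `app before` then `app after`, which shows the application interceptor wrapping everything below it.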

retryAndFollowUpInterceptor

RetryAndFollowUpInterceptor is mainly responsible for retrying failed requests and following redirects. The flow is as follows:

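- Create a StreamAllocation from the client's connection pool (a Connection is a socket connection to the server), an Address built from the request, the call, and so on. StreamAllocation allocates streams, i.e. the data streams carried over a socket connection: HTTP/1.x carries only one stream per connection at a time, while HTTP/2 can carry several.

- Enter a loop and obtain the Response returned once the rest of the chain has run layer by layer. Call followUpRequest(response), which builds a follow-up Request from the response code and the redirect URL. If that Request is null, no follow-up is needed: release streamAllocation and return the Response to the layer above (that is, the Call's execute or enqueue). Otherwise, close the current response body with closeQuietly and continue.

- Check whether the number of follow-ups exceeds 20 (if it does, release streamAllocation and throw a ProtocolException), whether the request can be sent again, and whether the Connection can be reused (same host, port and scheme), then jump back to the second step.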
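The follow-up loop can be sketched as below. This is a hypothetical model, not the real interceptor: a `Map` from URL to redirect target stands in for both `realChain.proceed` and `followUpRequest`, and a URL with no entry means "no follow-up". The limit of 20 mirrors the description above; throwing `java.net.ProtocolException` once it is exceeded matches what OkHttp 3.x does. Retry on failure, body repeatability and connection-reuse checks are left out.

```java
import java.net.ProtocolException;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the follow-up loop; not OkHttp's RetryAndFollowUpInterceptor.
class FollowUpDemo {
    static final int MAX_FOLLOW_UPS = 20;

    /**
     * Follows redirects starting at url. redirects maps a URL to the URL it redirects to;
     * a URL with no entry gets a final (non-redirect) response.
     */
    static String follow(String url, Map<String, String> redirects) throws ProtocolException {
        int followUpCount = 0;
        String current = url;
        while (true) {
            // Stand-in for realChain.proceed(request) + followUpRequest(response):
            // null means the response needs no follow-up.
            String next = redirects.get(current);
            if (next == null) {
                return current; // hand the final response back to the caller
            }
            if (++followUpCount > MAX_FOLLOW_UPS) {
                throw new ProtocolException("Too many follow-up requests: " + followUpCount);
            }
            current = next; // build the follow-up request and go round the loop again
        }
    }
}
```

With `a → b` and `b → c` the call ends at `c`, while a URL that redirects to itself throws as soon as the 21st follow-up is attempted.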

```java
public Response intercept(Chain chain) throws IOException {
```

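retryAndFollowUpInterceptor is the first of the built-in interceptors, and its main job is following redirects. The key point is that realChain.proceed hands the request to all the interceptors below it, which process it layer by layer, and only returns a Response once they are done. This is somewhat similar to how Android dispatches touch events.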

BridgeInterceptor

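BridgeInterceptor does two things: it fills in and normalizes the request headers the caller left out, and it post-processes the response returned by the interceptors below it (Set-Cookie, gzip). In detail:

- Fill in the request's Content-Type.

- Set the body size or chunking. Note that Content-Length (a known size) and Transfer-Encoding: chunked are mutually exclusive.

- Fill in Host, Connection: Keep-Alive, Accept-Encoding (gzip by default) and the User-Agent.

- Add the Cookie header. Note that the CookieJar is the one passed in when the OkHttpClient was created.

- Build the completed request and pass it to chain.proceed; once the interceptors below have finished, a Response comes back.

- The cookieJar saves the response's Set-Cookie headers together with their domain. If the response is gzip-compressed (and BridgeInterceptor added Accept-Encoding: gzip itself), the body is decompressed and Content-Encoding and Content-Length are removed from the response headers, since they describe the compressed body. The modified response is then built and returned to the interceptor above.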
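Both halves of the bridge can be sketched as below. This is a simplified, hypothetical version meant only to show the header rules, not OkHttp's code: headers are a case-insensitive `TreeMap`, `hasBody` and `contentLength` stand in for the request body (`-1` means the length is unknown), `BridgeDemo` is a made-up name and the `User-Agent` value is a placeholder. Cookies are left out.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical sketch of the bridge's header rules; not OkHttp's BridgeInterceptor.
class BridgeDemo {
    /** Fills in headers the caller left out. contentLength == -1 means "length unknown". */
    static Map<String, String> fillRequestHeaders(Map<String, String> userHeaders, String host,
                                                  boolean hasBody, String contentType,
                                                  long contentLength) {
        // HTTP header names are case-insensitive.
        Map<String, String> h = new TreeMap<>(String.CASE_INSENSITIVE_ORDER);
        h.putAll(userHeaders);
        if (hasBody) {
            if (contentType != null) h.put("Content-Type", contentType);
            if (contentLength != -1) {
                h.put("Content-Length", Long.toString(contentLength));
                h.remove("Transfer-Encoding"); // known size: no chunking
            } else {
                h.put("Transfer-Encoding", "chunked");
                h.remove("Content-Length"); // unknown size: send in chunks instead
            }
        }
        h.putIfAbsent("Host", host);
        h.putIfAbsent("Connection", "Keep-Alive");
        h.putIfAbsent("Accept-Encoding", "gzip");
        h.putIfAbsent("User-Agent", "demo-client/0.1"); // placeholder value
        return h;
    }

    /** If the response is gzip-encoded, unzips the body and drops headers that no longer apply. */
    static byte[] unwrapGzipResponse(Map<String, String> responseHeaders, byte[] body)
            throws IOException {
        if (!"gzip".equalsIgnoreCase(responseHeaders.get("Content-Encoding"))) return body;
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (InputStream in = new GZIPInputStream(new ByteArrayInputStream(body))) {
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) out.write(buf, 0, n);
        }
        responseHeaders.remove("Content-Encoding");
        responseHeaders.remove("Content-Length"); // it described the compressed size
        return out.toByteArray();
    }

    /** Helper that gzips bytes, used to build sample responses. */
    static byte[] gzip(byte[] raw) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(raw);
        }
        return out.toByteArray();
    }
}
```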

```java
public Response intercept(Chain chain) throws IOException {
```

CacheInterceptor

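CacheInterceptor handles caching. HTTP caching generally happens in one of two places: on the server side (caching servers) or on the client side (web browsers, apps).

- Server-side cache: a caching server keeps copies of static data from the origin server and answers new requests within the cache lifetime directly (as a CDN does).

- Client-side cache: the client application caches its own requests and responses, and when it needs the same data again within the cache lifetime it reads it from the cache.

Cache is the cache object passed in when the OkHttpClient is created; internally it uses DiskLruCache to store the responses that need caching.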
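DiskLruCache evicts the least recently used entries once the cache grows past its size limit. The in-memory sketch below only illustrates that eviction policy, using `LinkedHashMap` in access order; `LruResponseCache` is a hypothetical name, it is keyed by URL and bounded by entry count, whereas the real cache is bounded by bytes on disk and stores full responses.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory illustration of LRU eviction; not OkHttp's DiskLruCache.
class LruResponseCache extends LinkedHashMap<String, String> {
    private final int maxEntries;

    LruResponseCache(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true: get() moves an entry to the "recent" end
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<String, String> eldest) {
        return size() > maxEntries; // evict the least recently used entry once over capacity
    }
}
```

With a capacity of 2, putting `a` and `b`, reading `a`, then putting `c` evicts `b`, because `a` was touched more recently.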

```java
/** The request to send on the network, or null if this call doesn't use the network. */
```

```java
public Response intercept(Chain chain) throws IOException {
```
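The comment above describes `networkRequest`; together with a cached response (which may also be null) it drives what CacheInterceptor does next. The sketch below models that decision with strings and a status-code callback. It is a hypothetical simplification written from OkHttp 3.x behaviour as we understand it (a 504 when the network may not be used and nothing is cached, a 304 confirming the cached copy), not the interceptor's actual code; `CacheDecisionDemo` and `Network` are made-up names, and writing to the cache and merging headers are omitted.

```java
// Hypothetical model of CacheInterceptor's decision once networkRequest and
// cacheResponse are known (either may be null); not OkHttp's code.
class CacheDecisionDemo {
    static final int HTTP_NOT_MODIFIED = 304;

    /** Stand-in for sending the request down the chain; returns a status code. */
    interface Network {
        int fetch(String request);
    }

    static String decide(String networkRequest, String cacheResponse, Network network) {
        if (networkRequest == null && cacheResponse == null) {
            // Network forbidden (e.g. only-if-cached) and nothing usable in the cache.
            return "504 unsatisfiable request";
        }
        if (networkRequest == null) {
            return "cache: " + cacheResponse; // cached copy is fresh, skip the network
        }
        int code = network.fetch(networkRequest);
        if (cacheResponse != null && code == HTTP_NOT_MODIFIED) {
            return "cache (revalidated): " + cacheResponse; // server confirmed the copy is valid
        }
        return "network: " + code; // a cacheable response would also be stored here
    }
}
```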

ConnectInterceptor

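ConnectInterceptor's job is simple: it sets up a Connection for talking to the server, with StreamAllocation supplying a healthy connection that is not already in use. Once it has a connection, it passes the request on to the interceptors below.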

```java
@Override public Response intercept(Chain chain) throws IOException {
```
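How a pooled connection gets picked can be sketched as follows. This is a hypothetical model (`PoolDemo` and `Conn` are made-up names, not OkHttp's `ConnectionPool` or `RealConnection`): a connection is reused only if it is healthy, idle and points at the same scheme, host and port; otherwise a new one is created. The `inUse` flag models the HTTP/1.x rule of one stream per connection; HTTP/2 multiplexing, idle eviction and real sockets are left out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of reusing pooled connections; not OkHttp's ConnectionPool.
class PoolDemo {
    static final class Conn {
        final String scheme;
        final String host;
        final int port;
        boolean inUse;          // HTTP/1.x: one stream per connection at a time
        boolean healthy = true; // a real pool would check the socket's state here

        Conn(String scheme, String host, int port) {
            this.scheme = scheme;
            this.host = host;
            this.port = port;
        }

        boolean sameAddress(String scheme, String host, int port) {
            return this.scheme.equals(scheme) && this.host.equals(host) && this.port == port;
        }
    }

    private final List<Conn> pool = new ArrayList<>();

    /** Returns a healthy idle connection to the same address, or creates a new one. */
    Conn acquire(String scheme, String host, int port) {
        for (Conn c : pool) {
            if (!c.inUse && c.healthy && c.sameAddress(scheme, host, port)) {
                c.inUse = true;
                return c;
            }
        }
        Conn fresh = new Conn(scheme, host, port); // real code would open a socket here
        fresh.inUse = true;
        pool.add(fresh);
        return fresh;
    }

    void release(Conn c) {
        c.inUse = false; // back in the pool, available for reuse
    }

    int size() {
        return pool.size();
    }
}
```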

networkInterceptors

These interceptors are defined by the user. Unless the call is forWebSocket, they are executed one after another; the code is in RealCall#getResponseWithInterceptorChain.
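The order in which RealCall#getResponseWithInterceptorChain assembles the list (as of OkHttp 3.x) can be summarised with strings standing in for interceptor objects. `ChainOrderDemo` is an illustrative name; the point of the sketch is where the `forWebSocket` check sits.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative model of the interceptor order; strings stand in for interceptor objects.
class ChainOrderDemo {
    static List<String> build(List<String> appInterceptors, List<String> networkInterceptors,
                              boolean forWebSocket) {
        List<String> chain = new ArrayList<>(appInterceptors); // user application interceptors first
        chain.add("RetryAndFollowUpInterceptor");
        chain.add("BridgeInterceptor");
        chain.add("CacheInterceptor");
        chain.add("ConnectInterceptor");
        if (!forWebSocket) {
            chain.addAll(networkInterceptors); // user network interceptors, skipped for WebSocket
        }
        chain.add("CallServerInterceptor"); // always last
        return chain;
    }
}
```

Because network interceptors come after CacheInterceptor and ConnectInterceptor, they only run when the request really goes to the network, and they see the headers BridgeInterceptor has added.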

CallServerInterceptor

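This is the last interceptor, and it is the one that actually talks to the server: it writes the request headers and body, then reads the response headers and body.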

```java
public Response intercept(Chain chain) throws IOException {
```
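Over HTTP/1.1, the exchange boils down to writing the request line, headers, a blank line and the body, then reading back a status line and headers. The sketch below shows that wire format against in-memory streams. `ExchangeDemo` is a hypothetical helper, not OkHttp's `HttpCodec`, and it skips response bodies, chunked encoding and HTTP/2.

```java
import java.io.BufferedReader;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of an HTTP/1.1 exchange; real code would use the socket's streams.
class ExchangeDemo {
    static void writeRequest(OutputStream out, String method, String path,
                             Map<String, String> headers, byte[] body) throws IOException {
        StringBuilder sb = new StringBuilder();
        sb.append(method).append(' ').append(path).append(" HTTP/1.1\r\n");
        for (Map.Entry<String, String> e : headers.entrySet()) {
            sb.append(e.getKey()).append(": ").append(e.getValue()).append("\r\n");
        }
        sb.append("\r\n"); // a blank line ends the header section
        out.write(sb.toString().getBytes(StandardCharsets.UTF_8));
        if (body != null) out.write(body);
        out.flush();
    }

    /** Parses a status line such as "HTTP/1.1 200 OK" plus headers; returns the status code. */
    static int readStatus(BufferedReader in, Map<String, String> headersOut) throws IOException {
        String statusLine = in.readLine();
        int code = Integer.parseInt(statusLine.split(" ")[1]);
        String line;
        while ((line = in.readLine()) != null && !line.isEmpty()) {
            int colon = line.indexOf(':');
            if (colon > 0) {
                headersOut.put(line.substring(0, colon).trim(), line.substring(colon + 1).trim());
            }
        }
        return code;
    }
}
```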